Created by mathematicians and machine learning experts from the University of Cambridge, Darktrace’s Enterprise Immune System uses AI algorithms that mimic the human immune system to defend enterprise networks of all types and sizes.

OpenGov recently spoke to Sanjay Aurora, MD, Asia Pacific at Darktrace, about how cyber-attacks are evolving and how artificial intelligence can help defend against increasingly sophisticated attacks.

A new era of low and slow cyberattacks

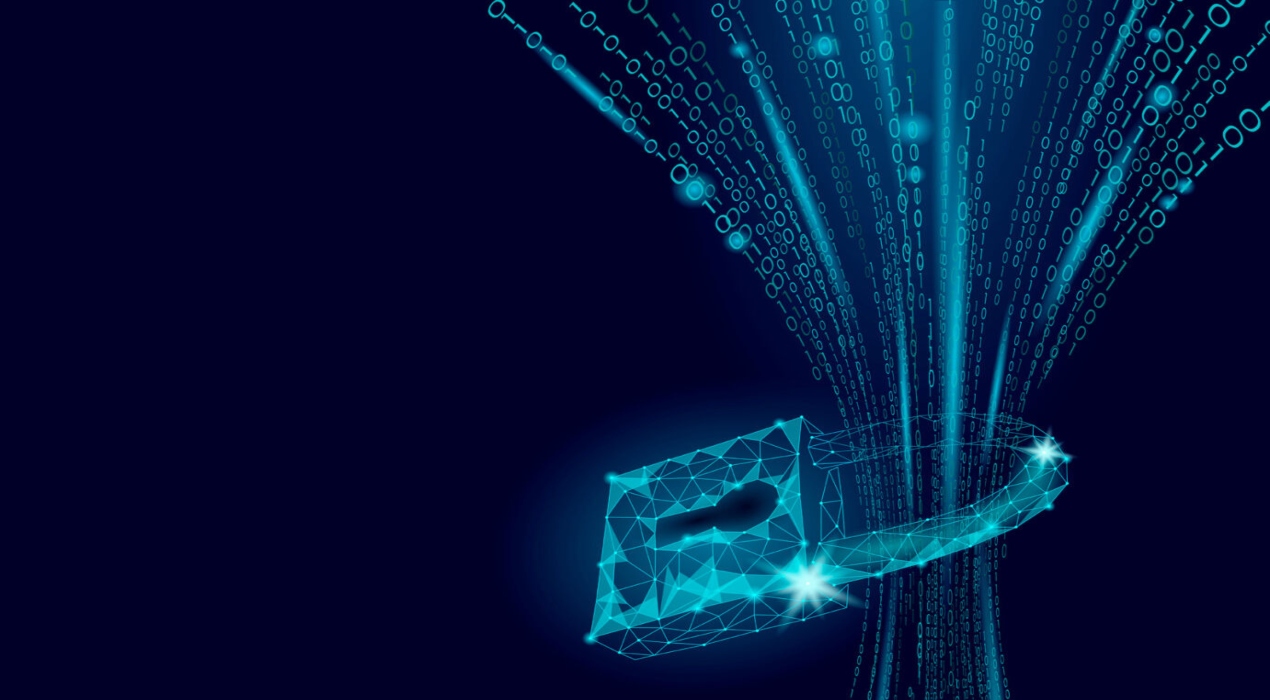

Today cyber-attacks make headlines all the time. But these are not the cyber-attacks of 15 to 20 years ago, which defaced websites and stole credit card information. Cyber-criminals are now carrying out significantly more sophisticated and stealthy “low and slow” targeted attacks.

Mr. Aurora said, “You look at the DNC email hack in the US. They are still talking about was there an attack, was there an attempt. Their trust in the information is getting lost. This is the new generation of cyberattacks that we are dealing with. People and organisations, from the largest of the large to the smallest, are unable to trust information.”

He gave another example: a client, a laboratory in Australia, that handles a great deal of confidential patient information. The laboratory is worried about somebody stealing that information. But it is even more worried about somebody getting in undetected and tweaking some of the information, so that it can no longer tell what is real and what is fake. These low and slow targeted attacks reside in organisations for a long time. The attackers are not going after organisations randomly. Sometimes they are after a particular piece of information from a specific organisation.

“But organisations, unfortunately, still rely on finding ‘good’ and ‘bad’. They build walls. Like how did you enter this building? You showed your ID to the gatekeeper, the gatekeeper checked your ID, gave you a pass. You tap the pass and you are in,” Mr. Aurora said.

He continued, “Or I could have tapped you in. Then I would have become an insider threat. Cyber security till recently was heavily relying on rules and signatures, on locks and walls. Whereas the attackers are using so many mechanisms to get inside. If you have the tallest of firewalls, attackers will get even higher ladders. Notwithstanding policies, compliance and training, all of us sitting here are insider threats to our respective organisations.”

Besides the reliance on locks and walls, the second issue is a lack of visibility. Today every device with an IP address is a potential point of entry. It could be a printer, the audio/video conferencing equipment in corporate boardrooms or even a connected coffee machine. Any of these could be an easy way in, and many organisations do not even have them on their radar.

Defining the normal to detect the abnormal

Mr. Aurora compared an organisation to the human body. The human body is attacked by unknown unknowns every second, yet we have thrived and survived for millions of years. The skin is our firewall, but things still get in. The body’s immune system responds by first understanding what is normal and then detecting what is abnormal on that basis.

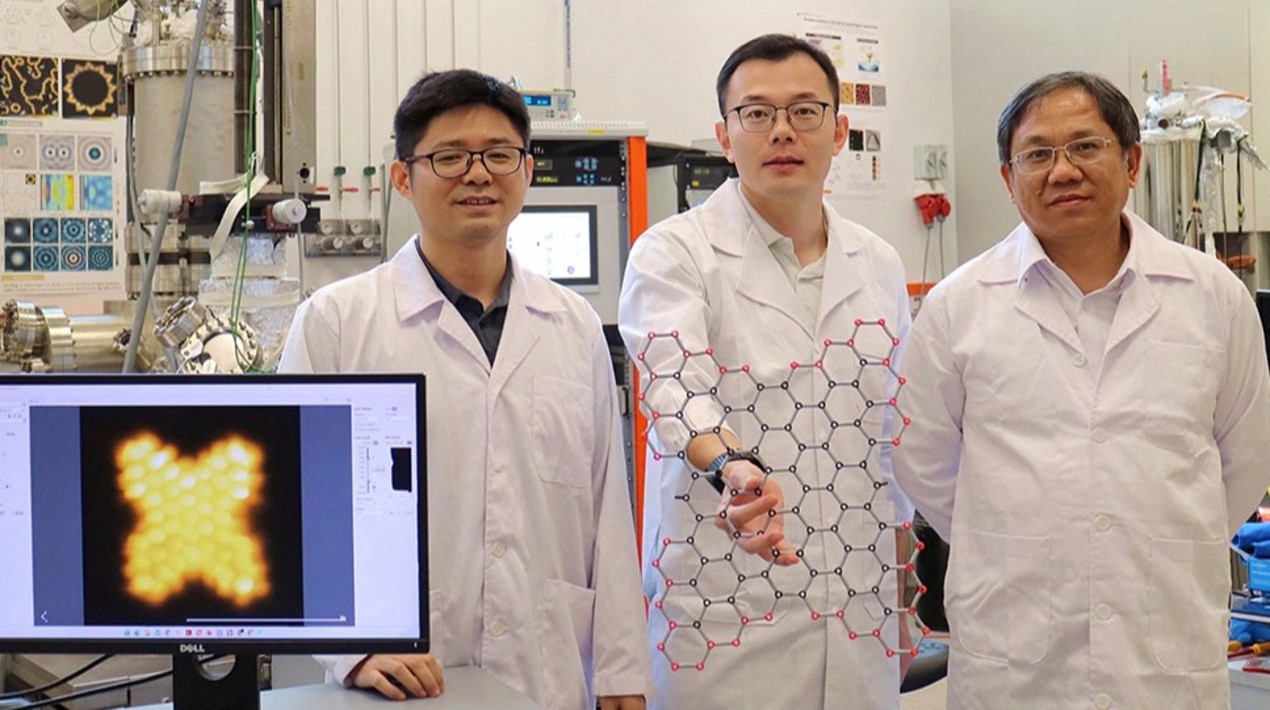

“Around four years ago, Cambridge University’s mathematicians came up with a concept largely based on this principle. If the human body can understand and fight back autonomously, why would an organisation not be able to do it? Because there is information. The information is in the data. By using mathematics and unsupervised machine learning, if you are able to establish a pattern of life, then anything abnormal which disturbs that normal pattern will be detected,” explained Mr. Aurora.

The abnormal could be a device talking to a certain server at 2 a.m., which it has never done before. Or a user downloading huge amounts of information, which they have never done before. It is a very subtle change in the behaviour of a device, a user or a network. Neither the user nor the device has broken any rules, so an organisation relying only on rules would not be able to detect that anomalous behaviour.
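The article does not describe Darktrace’s actual mathematics, so the following is only a toy Python sketch of the general idea: learn a “pattern of life” without labels, then flag departures from it. The `Event` fields, the `PatternOfLife` class, the per-device z-score test and the threshold of 3 are all illustrative assumptions, not Darktrace’s method. It covers the two examples above: a device contacting a server at an hour it never has before, and a transfer far larger than that device’s own history.

```python
import statistics
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    device: str        # e.g. a printer, laptop or boardroom video unit
    destination: str   # server the device talked to
    hour: int          # hour of day, 0-23
    bytes_out: int     # volume transferred in this event


class PatternOfLife:
    """Toy unsupervised baseline (hypothetical): it is given no labels and no
    notion of 'good' or 'bad'; it only remembers what each device normally does."""

    def __init__(self, z_threshold: float = 3.0, min_samples: int = 5):
        self.z_threshold = z_threshold    # assumed cut-off, not from the article
        self.min_samples = min_samples    # history needed before judging volume
        self.seen = set()                 # (device, destination, hour) triples
        self.volumes = defaultdict(list)  # device -> past transfer sizes

    def learn(self, e: Event) -> None:
        self.seen.add((e.device, e.destination, e.hour))
        self.volumes[e.device].append(e.bytes_out)

    def anomalies(self, e: Event) -> list:
        reasons = []
        # Check 1: has this device ever talked to this server at this hour?
        if (e.device, e.destination, e.hour) not in self.seen:
            reasons.append("new destination/hour for this device")
        # Check 2: is this transfer far above the device's own history?
        history = self.volumes[e.device]
        if len(history) >= self.min_samples:
            mean = statistics.fmean(history)
            spread = max(statistics.pstdev(history), 1.0)  # avoid divide-by-zero
            if (e.bytes_out - mean) / spread > self.z_threshold:
                reasons.append("unusually large transfer")
        return reasons


# One simulated "week" of a printer talking to a file server in office hours.
baseline = PatternOfLife()
for day in range(7):
    for hour in range(9, 18):
        baseline.learn(Event("printer-1", "fileserver", hour, 2000 + 10 * day))

print(baseline.anomalies(Event("printer-1", "fileserver", 10, 2040)))     # []
print(baseline.anomalies(Event("printer-1", "fileserver", 2, 2030)))      # ['new destination/hour for this device']
print(baseline.anomalies(Event("printer-1", "fileserver", 11, 500_000)))  # ['unusually large transfer']
```

Neither check encodes a rule about what is forbidden; in this sketch both compare a device only with its own past, so nothing needs to be told whether the network belongs to a bank or a law firm.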

By correlating an anomaly in one place with what other parts of the network are doing, the organisation can mathematically deduce that the anomaly is a leading indicator of a much larger problem that might be brewing. (Mr. Aurora stressed that “leading indicator” is the key idea here.) This approach allows organisations to take proactive measures.
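One simple way to picture how several small anomalies can add up to a leading indicator is to keep a running score per entity. The sketch below is a hypothetical Python illustration, not a description of Darktrace’s system: the `LeadingIndicatorScore` class, the 72-hour half-life and the alert threshold of 2.5 are assumptions chosen for the example. Each anomaly adds a weight to a device’s score, older evidence fades, and an alert fires only when several indicators accumulate close together.

```python
import math
from collections import defaultdict


class LeadingIndicatorScore:
    """Hypothetical aggregator: weak anomalies on the same entity add up,
    older evidence decays with a half-life, and an alert fires only when the
    accumulated score crosses a threshold no single anomaly reaches."""

    def __init__(self, half_life_hours: float = 72.0, alert_threshold: float = 2.5):
        self.decay_rate = math.log(2) / half_life_hours  # per hour (assumed)
        self.alert_threshold = alert_threshold           # assumed value
        self.score = defaultdict(float)                  # entity -> decayed score
        self.last_seen = {}                              # entity -> last update time (hours)

    def observe(self, entity: str, t_hours: float, weight: float = 1.0) -> bool:
        """Record one anomaly for `entity` at `t_hours`; return True if the
        accumulated evidence now crosses the alert threshold."""
        elapsed = t_hours - self.last_seen.get(entity, t_hours)
        self.score[entity] *= math.exp(-self.decay_rate * elapsed)  # fade old evidence
        self.score[entity] += weight
        self.last_seen[entity] = t_hours
        return self.score[entity] >= self.alert_threshold


tracker = LeadingIndicatorScore()
# Three weak signals on one laptop within two hours...
burst = [tracker.observe("laptop-42", t) for t in (0.0, 1.0, 2.0)]
# ...versus the same three signals spread over more than two weeks.
spread = [tracker.observe("laptop-7", t) for t in (0.0, 200.0, 400.0)]
print(burst)   # [False, False, True]
print(spread)  # [False, False, False]
```

The half-life is a design choice: a longer one lets slower, “low and slow” activity accumulate into an alert, at the cost of more noise from unrelated events.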

‘The battle at the border is over’

Sitting inside the network and using the principle of the immune system, Darktrace establishes a pattern of life within a week. We asked whether the system is pre-trained on existing datasets.

Mr. Aurora replied, “We don’t even tell the system if this is a bank or a law firm or a government entity. Because the moment you start putting some rules, it starts with presumptions and the learning goes wrong. Every entity operates differently. Even a Bank A will be different from a Bank B.”

Within a week the system understands how the organisation works and how its users and devices behave, and it can alert the organisation to anything abnormal that should be investigated.

Frequently, the large attacks we read about turn out to be the culmination of several leading indicators that existed for a long time. For instance, employees who use a VPN to hide activity such as shopping or browsing prohibited websites pose a naive insider threat. Unknowingly, they present massive risks.

Mr. Aurora said, “That is a result of these leading indicators which are already present in the organisation and were going unnoticed.”

“The battle at the border is over. You cannot defend the border any more. The real battle is inside. The battle now is how do you deduce from the leading indicators and pro-actively stop them early in the tracks before they become headlines.”

The system gets wiser as it processes more and more traffic. Darktrace is now taking this a step further, with machines responding to threats autonomously. Having deduced the real issue behind a threat, the machines can take very precise action, such as slowing down an attack’s progress, which gives human responders that little extra time to intervene and stop it. This is particularly useful against fast-moving threats like ransomware. During the WannaCry attack in May this year, Darktrace’s Enterprise Immune System detected and contained the attack for a number of its customers, including an NHS (National Health Service) agency.

This is accomplished by Darktrace’s Antigena solution. Its autonomous response capability allows organisations to fight back directly, and networks to defend themselves against specific threats without any disruption.

Mr. Aurora said, “This is a cyber arms race. You cannot fight those machine- or AI-led attacks using conventional security teams, who will raise a ticket and try to understand from logs as to what is going on. Traditional security people are like firefighters. They solve problems. We have shifted the paradigm. Instead of just firefighting the known issues, you discover the unknown issues and take action.”