While learning, humans are guided by teachers or mentors so that they acquire knowledge more quickly. Students can tell when the teacher has shown a good or a substandard example. They can also imitate the teacher’s actions precisely only if they exert greater effort to reach the same level of proficiency. In the same way, computer scientists can use “teacher” systems to train another machine to complete a task.

Researchers from MIT and Technion, the Israel Institute of Technology, have developed an algorithm that automatically and independently determines when the student should mimic the teacher (imitation learning) and when it should learn through trial and error (reinforcement learning). Because one machine learns from another without a third party having to step in and direct the teaching, the approach saves considerable time and energy.

Several current approaches attempting to find a middle ground between imitation learning and reinforcement learning often rely on a laborious process of brute force trial-and-error. Researchers select a weighted blend of the two learning methods, execute the entire training procedure, and iterate the process multiple times to discover the optimal balance. However, this approach is inefficient and often incurs substantial computational costs, rendering it impractical in many cases.
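The brute-force procedure described above can be sketched in a few lines of Python. This is a minimal illustration, not the researchers' code: the names `blended_loss`, `sweep_weights` and `toy_train_and_evaluate` are hypothetical, and the toy scoring function merely stands in for a complete training run.

```python
from typing import Callable, Sequence, Tuple


def blended_loss(rl_loss: float, imitation_loss: float, weight: float) -> float:
    """Fixed weighted blend of the two objectives (weight scales imitation)."""
    return rl_loss + weight * imitation_loss


def sweep_weights(
    train_and_evaluate: Callable[[float], float],
    candidate_weights: Sequence[float],
) -> Tuple[float, float]:
    """Run one full training per candidate weight and keep the best score.

    Expects at least one candidate weight.
    """
    best_weight, best_score = candidate_weights[0], float("-inf")
    for weight in candidate_weights:
        # Each call represents a complete, expensive training run.
        score = train_and_evaluate(weight)
        if score > best_score:
            best_weight, best_score = weight, score
    return best_weight, best_score


def toy_train_and_evaluate(weight: float) -> float:
    """Hypothetical stand-in for training; its score peaks at weight 0.3."""
    return 1.0 - (weight - 0.3) ** 2


if __name__ == "__main__":
    print(sweep_weights(toy_train_and_evaluate, [0.0, 0.1, 0.3, 1.0]))
```

The cost grows with every candidate weight, because each one requires training from scratch. That is the inefficiency the paragraph above points to.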

In simulations, the researchers found that combining trial-and-error learning with imitation allowed students to learn faster and more efficiently than imitation alone, because the student is free to explore in many different ways. By experimenting and exploring on its own as well as following the teacher, the student can connect what it discovers with what it is shown and arrive at a more complete solution.

“Integrating trial-and-error learning and following a teacher yields a remarkable synergy. It grants our algorithm the capability to tackle highly challenging tasks that cannot be effectively addressed by employing either approach independently,” said Idan Shenfeld, an electrical engineering and computer science (EECS) graduate student and lead author of a paper on this technique.

The approach enables the student machine to deviate from imitating the teacher’s behaviour when the teacher is either too good or not good enough to be copied usefully. The student can later revert to mimicking the teacher’s actions during training if doing so proves more beneficial, which leads to improved outcomes and accelerated learning.

The proposed approach entails training two separate students. The first student is trained with a weighted combination of reinforcement learning and imitation learning.

The second student, by contrast, is trained solely with reinforcement learning and relies exclusively on it to learn the same task. Taken as a whole, the approach minimises the need for extensive parameter adjustments while still delivering strong performance.
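The article does not spell out how the two students interact. One plausible reading, assumed here purely for illustration, is that the algorithm compares their performance and shifts the first student’s balance towards imitation when following the teacher pays off, and towards trial and error when it does not. The function name `update_imitation_weight`, its parameters and the update rule itself are hypothetical and are not taken from the paper.

```python
def update_imitation_weight(
    weight: float,
    blended_student_return: float,
    rl_only_student_return: float,
    step_size: float = 0.5,
) -> float:
    """Nudge the imitation weight according to which student is ahead.

    Assumed rule: if the student that also follows the teacher scores
    higher, lean more on imitation; if the reinforcement-learning-only
    student scores higher, lean less on it.
    """
    gap = blended_student_return - rl_only_student_return
    new_weight = weight + step_size * gap
    # Keep the weight non-negative so imitation is never rewarded negatively.
    return max(0.0, new_weight)


if __name__ == "__main__":
    weight = 1.0
    # The blended student is ahead, so the imitation weight increases.
    weight = update_imitation_weight(weight, 5.0, 3.0)
    print(weight)
```

Under this assumption the balance is adjusted during a single training run, rather than by repeating the whole run for each candidate weight.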

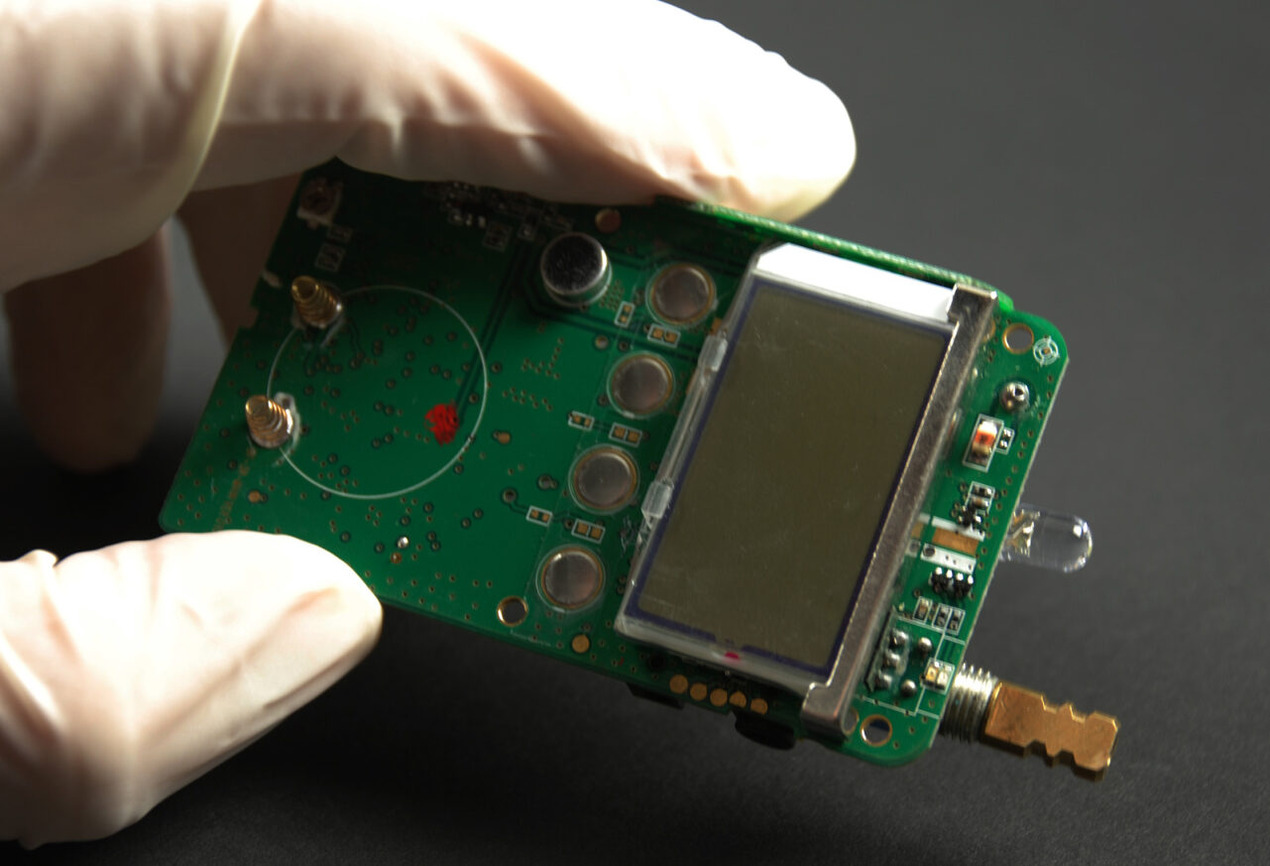

To give their algorithm an even more difficult test, the researchers set up a simulated environment involving a robotic hand equipped with touch sensors but no visual perception. The objective was to reorient a pen to the correct position. The teacher had access to the pen’s real-time orientation data, while the student had to rely on touch sensors alone to determine the pen’s orientation.

Rishabh Agarwal, director of a private research laboratory in the US and an assistant professor in the Computer Science and Artificial Intelligence Laboratory, underscores that the ability to reorient objects is just one example of the various manipulation tasks that a future household robot would be required to accomplish. “This research introduces a compelling method, leveraging previous computational efforts in reinforcement learning.”

“I am very optimistic about future possibilities of applying this work to ease our life with tactile sensing,” Abhishek Gupta, an assistant professor at the University of Washington, concluded.