MIT researchers have developed an augmented reality headset that helps its wearer find concealed objects. The system combines radio frequency (RF) signals with computer vision to locate a specific item that is hidden from view. It then guides the user to the hidden item, whether it is stored in a box or buried under a pile.

The AR device uses signals from RFID tags to find hidden items. A tagged item reflects the signals sent by an RF antenna mounted on the headset alongside its computer vision hardware. Because RF signals pass through everyday materials such as cardboard boxes, plastic containers, and wooden dividers, the device can locate objects behind them.

The headset guides the wearer across a room to the item’s location, which appears as a transparent sphere in the augmented reality (AR) interface. Once the item is in the user’s hand, the headset, called X-AR, verifies that they have picked up the correct object.

“Our entire goal with this project was to build an augmented reality platform that helps you to see things that are unnoticeable — things that are in boxes or around corners — and in doing so, it can navigate you toward them and truly make it possible for you to see the physical world in ways that were not possible before,” said Fadel Adib, an Associate Professor in the Department of Electrical Engineering and Computer Science, the Director of the Signal Kinetics group in the Media Lab, and the project’s principal investigator.

Developing the headset

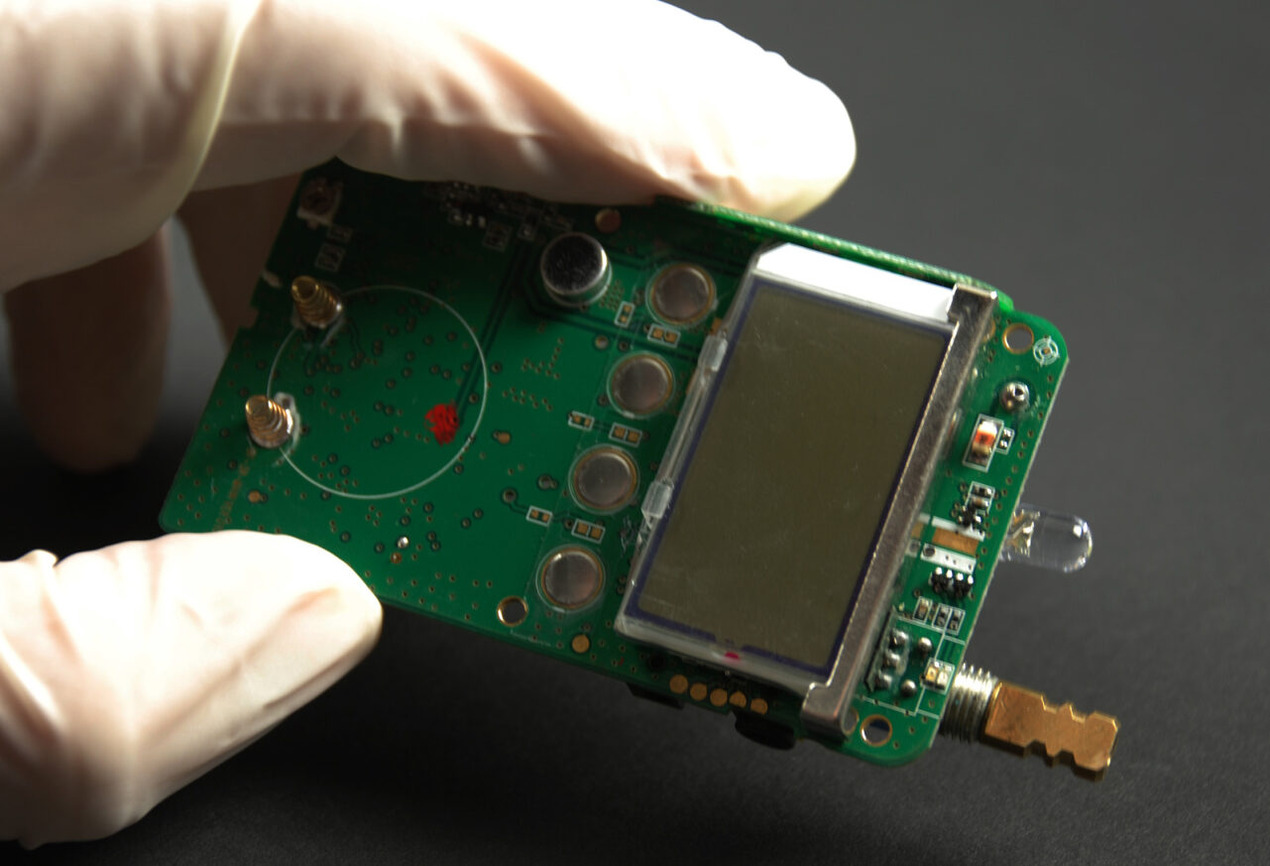

To build an augmented reality headset with X-ray vision, the researchers first had to equip an existing headset with an antenna capable of communicating with RFID-tagged items. They modified a lightweight loop antenna so that it could transmit and receive signals while mounted on the headset’s visor. Once they had a working antenna, the team used it to localise RFID-tagged items.

“One huge issue was creating an antenna that would fit atop the headgear without obscuring any cameras or hindering its functions. This is critical because we need to use all of the specs on the visor,” explained Aline Eid, a member of the research team.

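To localise the tags, they used synthetic aperture radar (SAR), a technique similar to how aeroplanes image objects on the ground: measurements taken with a single antenna from different vantage points, as the wearer moves, are combined as if they came from a much larger antenna. X-AR uses visual input from the headset’s self-tracking functionality to build a map of the surroundings and determine its own position within that map. Once X-AR has located the item and the user picks it up, the headset must confirm that the user has picked up the correct one.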
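The idea behind SAR localisation can be illustrated with a small simulation. This is a minimal sketch under stated assumptions, not the researchers' implementation: the 915 MHz carrier, the L-shaped walking path, the 5 cm search grid, and every function name are hypothetical choices made for illustration, and the simulation is two-dimensional and noise-free. It relies on one known property of backscatter: the phase of a tag's reply depends on the round-trip distance between antenna and tag.

```python
import cmath
import math

C = 299_792_458.0        # speed of light in m/s
FREQ_HZ = 915e6          # ASSUMPTION: a typical UHF RFID carrier, not from the article
WAVELENGTH = C / FREQ_HZ


def round_trip_phase(antenna, tag):
    """Phase of a reply that travelled antenna -> tag -> antenna."""
    d = math.dist(antenna, tag)
    return (4 * math.pi * d / WAVELENGTH) % (2 * math.pi)


def sar_scores(antenna_path, phases, grid):
    """Score each candidate point by coherently summing the measurements.

    For a candidate point, the phase it *would* have produced is removed
    from each measurement. At the true tag position all terms line up and
    the normalised sum approaches 1; elsewhere they partly cancel.
    """
    scores = {}
    for point in grid:
        total = 0j
        for antenna, phase in zip(antenna_path, phases):
            expected = 4 * math.pi * math.dist(antenna, point) / WAVELENGTH
            total += cmath.exp(1j * (phase - expected))
        scores[point] = abs(total) / len(antenna_path)
    return scores


def locate(antenna_path, phases, grid):
    """Return the grid point with the highest coherent score."""
    scores = sar_scores(antenna_path, phases, grid)
    return max(scores, key=scores.get)


# Hypothetical scenario: the wearer walks an L-shaped path (positions known
# from the headset's self-tracking) while a hidden tag sits at true_tag.
true_tag = (1.5, 2.0)
path = [(0.05 * i, 0.0) for i in range(40)]
path += [(2.0, 0.05 * j) for j in range(1, 21)]
phases = [round_trip_phase(a, true_tag) for a in path]

# 5 cm search grid over a 3 m x 3 m area; y starts above the first leg of
# the path so mirror-image positions behind the walker are excluded.
grid = [(round(0.05 * x, 2), round(0.05 * y, 2))
        for x in range(61) for y in range(1, 61)]

estimate = locate(path, phases, grid)
print("estimated tag position:", estimate)
```

In this idealised setting the score peaks at the grid cell that contains the simulated tag. A real system would also have to cope with measurement noise, multipath reflections, and errors in the headset's estimate of its own position.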

From the warehouse to the assembly line

X-AR could help e-commerce warehouse workers quickly find products on cluttered shelves or buried in boxes, or identify the exact item for an order when many similar objects are in the same bin. It could also be used in a factory to help technicians locate the parts they need to assemble a product.

To put X-AR to the test, the researchers built a mock warehouse out of cardboard boxes and plastic bins, then filled them with RFID-tagged items. They found that X-AR could guide the user toward a targeted item: on average, the item was located less than 10 centimetres from where X-AR directed the user. The baseline methods the researchers tested had a median error of 25 to 35 centimetres.

They also found that X-AR correctly verified that the user had picked up the right item 98.9% of the time. Even when the item was still inside its box, it was 91.9% accurate.

Having demonstrated that X-AR works, the researchers plan to explore how other sensing modalities, such as WiFi, mmWave technology, or terahertz waves, could be used to enhance its visualisation and interaction capabilities. They could also extend the antenna’s range beyond 3 metres and expand the system for use by multiple coordinated headsets.