404 Error

Page Not Found

Sorry, the page you were looking for cannot be found. Try searching for the best match or browse the links below:

Recommended Stories

Innovating for Impact: Singapore’s Biomedical and Tech Leadership

Alita Sharon

April 26, 2024

India Advancing IP Awareness to Foster Innovation

Samaya Dharmaraj

April 26, 2024

Indonesia Trials Administration Services Portal

Azizah Saffa

April 26, 2024

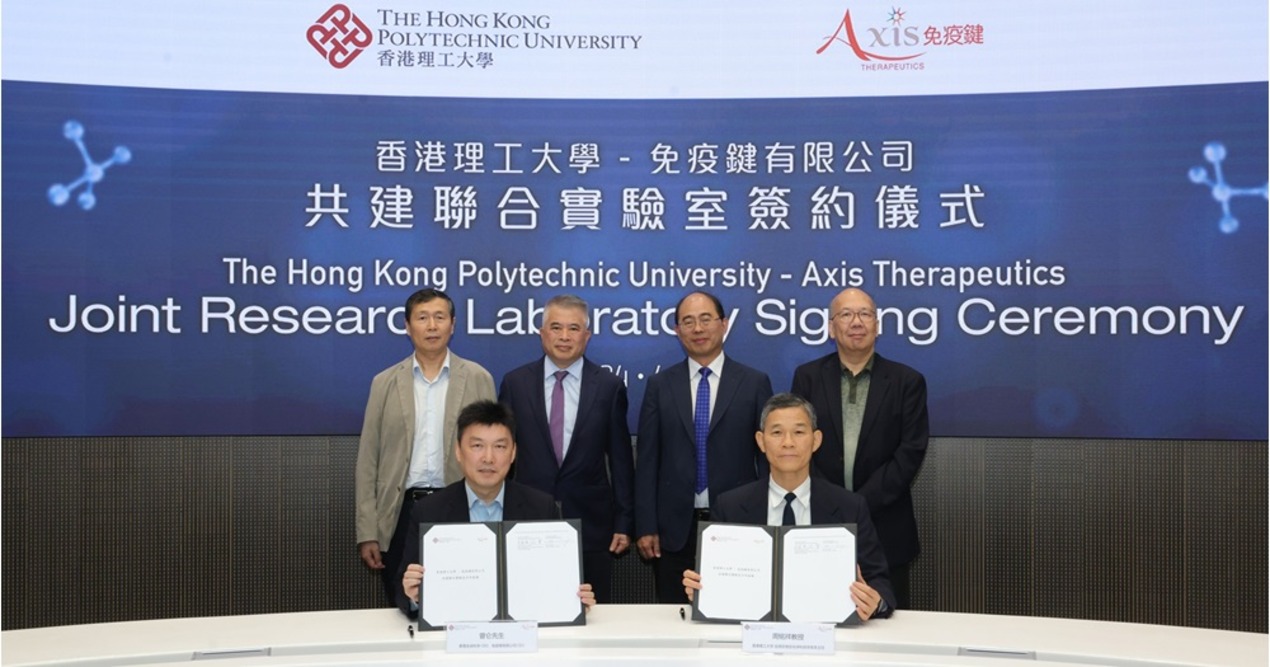

New PolyU Centre to Enhance Immunotherapy Tech

Alita Sharon

April 26, 2024

Vietnam Pushing for an Inclusive Digital Economy

Samaya Dharmaraj

April 26, 2024

Ransomware Resilience: Navigating Threats in the U.S. Financial Sector

Azizah Saffa

April 26, 2024

CityUHK Launches Institute of Digital Medicine

Alita Sharon

April 26, 2024