
In a recent publication, computer scientists from the National Institute of Standards and Technology (NIST) and their collaborators shed light on the vulnerabilities of artificial intelligence (AI) and machine learning (ML) systems, emphasising the potential for deliberate manipulation or “poisoning” by adversaries. The work, titled “Adversarial Machine Learning: A Taxonomy and Terminology of Attacks and Mitigations (NIST.AI.100-2),” is part of NIST’s broader initiative to foster the development of trustworthy AI.

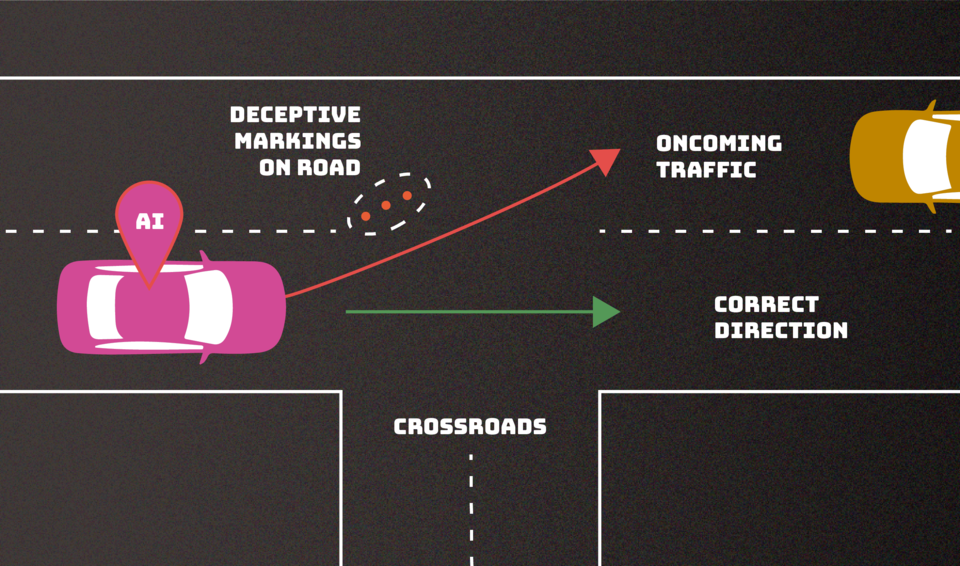

The researchers acknowledged that AI systems have become integral to modern society, performing tasks ranging from autonomous driving to medical diagnosis and customer interactions via online chatbots. These systems rely on extensive training datasets to learn and predict outcomes in various situations. However, a critical issue is that the data itself may not be trustworthy, since much of it is sourced from websites and public interactions. Bad actors can exploit this weakness both during the training phase and in subsequent interactions, leading AI systems to exhibit undesirable behaviours.

One notable concern highlighted in the publication is the lack of foolproof defence mechanisms to protect AI systems from misdirection. The sheer size of training datasets makes it challenging for developers to effectively monitor and filter content, leaving room for malicious actors to corrupt the data. For instance, chatbots may inadvertently learn to respond with abusive or racist language when exposed to carefully crafted malicious prompts.

The report identified four major types of attacks on AI systems: evasion, poisoning, privacy, and abuse attacks. Evasion attacks occur after deployment and aim to alter inputs to influence system responses. Poisoning attacks, which occur during training, involve introducing corrupted data to the system. Privacy attacks target sensitive information about the AI or its training data during deployment. Abuse attacks entail inserting incorrect information into legitimate sources for the AI to absorb.
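To make the difference between training-time and deployment-time attacks concrete, the following is a minimal toy sketch and is not taken from the NIST report. It assumes a one-dimensional nearest-centroid classifier; the function names, labels, and data values are all hypothetical and chosen only for illustration. Poisoning is modelled as mislabelled points injected into the training set, and evasion as a small change to an input presented to an already trained model.

```python
from statistics import mean

def train_centroids(samples):
    """Train a toy classifier: return {label: mean feature value}."""
    by_label = {}
    for value, label in samples:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}

def predict(centroids, value):
    """Return the label whose centroid is closest to the input value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))

# Hypothetical clean training data: (feature, label) pairs.
clean = [(1.0, "benign"), (2.0, "benign"), (3.0, "benign"),
         (7.0, "malicious"), (8.0, "malicious"), (9.0, "malicious")]

# Poisoning (training time): the attacker injects mislabelled samples,
# dragging the "benign" centroid towards the malicious region.
poison = [(8.0, "benign"), (9.0, "benign"), (10.0, "benign")]

clean_model = train_centroids(clean)
poisoned_model = train_centroids(clean + poison)

probe = 6.0
print(predict(clean_model, probe))     # malicious
print(predict(poisoned_model, probe))  # benign

# Evasion (after deployment): the model is untouched, but the attacker
# nudges the input across the decision boundary of the clean model.
evasive_probe = 4.5
print(predict(clean_model, evasive_probe))  # benign
```

Real attacks target far larger models and datasets, but the mechanism is the same in spirit: poisoning changes what the model learns, while evasion changes what the model sees.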

The authors classified these attacks based on criteria such as the attacker’s goals, capabilities, and knowledge. They stressed the importance of understanding these attack vectors and their potential consequences, as well as the limitations of existing defences. The report offers an overview of the various attacks AI products might face and proposes corresponding approaches to minimise the damage.

The publication underscored the challenges of securing AI systems, especially given that some of the underlying theoretical problems remain unsolved. Despite significant progress in AI and ML technologies, the authors cautioned that these systems are susceptible to attacks with potentially severe consequences. They emphasised the need for heightened awareness among developers and organisations deploying AI technology, noting that existing defences against adversarial attacks are incomplete at best.

As OpenGov has previously reported, the U.S. has also taken strides to tackle the deepfake challenge. In response to the escalating threat, the National Security Agency (NSA) and its federal partners, the Federal Bureau of Investigation (FBI) and the Cybersecurity and Infrastructure Security Agency (CISA), have issued new guidance aimed at addressing cybersecurity risks posed by synthetic media.

Deepfakes are multimedia content that has been manipulated or artificially created using machine learning and deep learning technologies. They present significant challenges for National Security Systems (NSS), the Department of Defense (DoD), and organisations within the Defense Industrial Base (DIB).

The collaborative effort has resulted in the publication of a Cybersecurity Information Sheet (CSI) titled “Contextualizing Deepfake Threats to Organizations.” The document serves as a guide for entities to recognise, safeguard against, and respond to deepfake threats effectively. Alongside “deepfake,” the guidance covers related terms for synthetically generated or altered media, including “shallow/cheap fakes,” “generative AI,” and “computer-generated imagery (CGI).”

The joint CSI offers practical recommendations for organisations to counter synthetic media threats, particularly deepfakes. One key suggestion is to adopt real-time verification capabilities, enabling swift identification of and response to potentially fake content. The guidance also recommends passive detection techniques for ongoing monitoring and early detection, and stresses the importance of safeguarding high-priority officers and their communications, as they are frequent targets of deepfake attempts.

The NIST publication serves as a comprehensive guide for AI developers and users, offering insights into potential threats and mitigation strategies. It encourages the community to contribute to the development of more robust defences against adversarial attacks on AI systems, recognising that a one-size-fits-all solution does not currently exist in this evolving landscape.